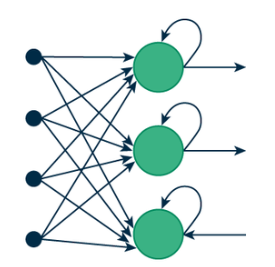

Recurrent Neural Networks (RNNs) are a class of artificial neural networks designed to recognize patterns in sequential data such as time series, speech, text, and financial data. Unlike traditional feedforward neural networks, RNNs have connections that form directed cycles, which let information persist from one time step to the next. This ability to maintain context and model temporal dependencies makes them useful for a wide range of applications, from natural language processing to time series prediction.

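The primary difference between RNNs and other types of neural networks is the loop in the architecture. At each time step, the network combines the current input with the hidden state it computed at the previous step and passes the result forward. The hidden state therefore acts as a “memory” of earlier inputs, which makes RNNs well suited to sequential data.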
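The loop is commonly written as the recurrence h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h), which is the textbook form of a vanilla RNN. The following is a minimal pure-Python sketch that assumes tanh as the activation. The names `rnn_forward`, `W_xh`, `W_hh` and `b_h`, and the weight values, are hypothetical and were chosen only for illustration.

```python
import math

def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def rnn_forward(xs, W_xh, W_hh, b_h, h0):
    """Run a vanilla RNN over xs and return the hidden state after every step.

    h_t = tanh(W_xh @ x_t + W_hh @ h_{t-1} + b_h)
    """
    h = h0
    states = []
    for x in xs:
        pre = [u + r + b for u, r, b in zip(matvec(W_xh, x), matvec(W_hh, h), b_h)]
        h = [math.tanh(v) for v in pre]  # the new state is fed back on the next step
        states.append(h)
    return states

# Hypothetical weights: 1-dimensional inputs, 2-dimensional hidden state.
W_xh = [[0.5], [-0.3]]
W_hh = [[0.1, 0.2], [0.0, 0.4]]
b_h = [0.0, 0.1]
states = rnn_forward([[1.0], [0.0], [0.0]], W_xh, W_hh, b_h, h0=[0.0, 0.0])
```

Because `h` is fed back at every step, the states after the two zero inputs differ from the states an all-zero sequence would produce. The first input persists in the network's memory.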

Types of RNNs

There are several types of RNNs, each designed to address specific challenges and improve performance for particular tasks:

1. Vanilla RNNs

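Vanilla RNNs are the simplest form of RNN. At every time step they apply the same update, typically a tanh of a weighted sum of the current input and the previous hidden state. They are effective for short sequences but struggle with long-term dependencies. During backpropagation through time, the gradient is multiplied by one factor per time step. When those factors are smaller than one, the gradient shrinks toward zero over long sequences, which is known as the vanishing gradient problem.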
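A scalar example makes the vanishing gradient concrete. Consider a hypothetical one-unit RNN h_t = tanh(w · h_{t-1} + x_t). By the chain rule, dh_T/dh_0 is a product of T per-step factors w · (1 - h_t²). The tanh derivative never exceeds 1, so each factor is at most |w| in magnitude. With |w| < 1, the gradient therefore shrinks at least geometrically with sequence length. The function name and the values of `w` and the inputs are assumptions made for illustration.

```python
import math

def gradient_through_time(w, xs, h0=0.0):
    """Return dh_T/dh_0 for the scalar RNN h_t = tanh(w * h_{t-1} + x_t).

    By the chain rule this is the product of the per-step derivatives
    dh_t/dh_{t-1} = w * (1 - h_t**2).
    """
    h, grad = h0, 1.0
    for x in xs:
        h = math.tanh(w * h + x)
        grad *= w * (1.0 - h * h)  # one factor per time step
    return grad

for T in (5, 20, 50):
    print(T, gradient_through_time(w=0.9, xs=[0.5] * T))
```

When the per-step factors exceed 1 in magnitude, the same product can grow instead of shrink. This is the related exploding gradient problem.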

2. Long Short-Term Memory (LSTM) Networks

LSTM networks are designed to handle long-term dependencies more effectively. Alongside the hidden state, they maintain a separate cell state. Three gates (input, forget, and output) control what is written to the cell state, what is kept, and what is exposed as output. This lets the network retain important information over longer periods and discard irrelevant data, which makes LSTMs particularly useful for tasks like language modeling and time series prediction.
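One LSTM time step can be sketched using a common formulation. Each gate is a sigmoid of an affine function of the concatenated vector [h_{t-1}; x_t], and g is a tanh candidate. The cell state is c_t = f ⊙ c_{t-1} + i ⊙ g, and the output is h_t = o ⊙ tanh(c_t). The `params` layout and the gate names are hypothetical. The usage sets all weights to zero and uses large biases to pin the forget gate open and the input gate shut, which shows the cell state being carried across 100 steps nearly unchanged.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, v):
    """Compute W @ v + b with W as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]

def lstm_step(x, h_prev, c_prev, params):
    """One LSTM step; params maps each gate name to a (W, b) pair acting on [h_prev; x]."""
    z = h_prev + x  # list concatenation gives the stacked vector [h_prev; x]

    def gate(name, act):
        W, b = params[name]
        return [act(v) for v in affine(W, b, z)]

    f = gate("forget", sigmoid)       # how much of the old cell state to keep
    i = gate("input", sigmoid)        # how much of the candidate to write
    o = gate("output", sigmoid)       # how much of the cell state to expose
    g = gate("candidate", math.tanh)  # candidate values to write
    c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c_prev, i, g)]
    h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
    return h, c

# Hypothetical 1-unit LSTM: zero weights, so each gate is fixed by its bias.
zero_W = [[0.0, 0.0]]
params = {
    "forget": (zero_W, [10.0]),    # sigmoid(10) is close to 1: keep the cell state
    "input": (zero_W, [-10.0]),    # sigmoid(-10) is close to 0: write almost nothing
    "output": (zero_W, [0.0]),
    "candidate": (zero_W, [0.0]),
}
h, c = [0.0], [1.0]
for _ in range(100):
    h, c = lstm_step([0.5], h, c, params)
# c[0] is still about 0.995 after 100 steps
```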

3. Gated Recurrent Units (GRUs)

GRUs are a simplified relative of LSTMs. They combine the forget and input gates into a single update gate, add a reset gate, and merge the cell state into the hidden state, while still capturing long-term dependencies. GRUs often perform comparably to LSTMs with fewer parameters (three weight blocks per layer instead of four), which makes them computationally cheaper.
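A GRU step can be sketched in the same style. The sketch assumes the formulation h_t = (1 - z) ⊙ h_{t-1} + z ⊙ h̃_t, where the candidate h̃_t is computed from the reset-gated previous state. Papers and libraries differ on whether z or 1 - z multiplies the old state. The helper `gated_rnn_param_count` is hypothetical and counts one weight matrix and one bias vector per block. Some libraries use two bias vectors per block, which changes the totals slightly.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, v):
    """Compute W @ v + b with W as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]

def gru_step(x, h_prev, params):
    """One GRU step; params maps 'update', 'reset', 'candidate' to (W, b) pairs."""
    W_z, b_z = params["update"]
    W_r, b_r = params["reset"]
    W_h, b_h = params["candidate"]
    z = [sigmoid(v) for v in affine(W_z, b_z, h_prev + x)]  # update gate
    r = [sigmoid(v) for v in affine(W_r, b_r, h_prev + x)]  # reset gate
    reset_h = [rj * hj for rj, hj in zip(r, h_prev)]
    h_tilde = [math.tanh(v) for v in affine(W_h, b_h, reset_h + x)]
    # The update gate interpolates between the old state and the candidate.
    return [(1 - zj) * hj + zj * nj for zj, hj, nj in zip(z, h_prev, h_tilde)]

def gated_rnn_param_count(blocks, hidden, inputs):
    """Parameters for `blocks` weight matrices over [h; x] plus one bias vector each."""
    return blocks * (hidden * (hidden + inputs) + hidden)

lstm_params = gated_rnn_param_count(4, hidden=128, inputs=64)  # forget, input, output, candidate
gru_params = gated_rnn_param_count(3, hidden=128, inputs=64)   # update, reset, candidate
# lstm_params == 98816, gru_params == 74112

# Hypothetical 1-unit GRU whose update gate is nearly closed: h stays close to 0.7.
zero_W = [[0.0, 0.0]]
keep = {"update": (zero_W, [-10.0]), "reset": (zero_W, [0.0]), "candidate": (zero_W, [0.0])}
h = gru_step([1.0], [0.7], keep)
```

Under this counting, a GRU layer with hidden size 128 and input size 64 has three quarters of the parameters of the matching LSTM layer.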

4. Bidirectional RNNs

Bidirectional RNNs process the sequence in both directions with two separate recurrent layers, one reading forward and one reading backward. At each time step, the network therefore has access to both past and future context. This is particularly useful when the entire sequence is available up front, as in named entity recognition or the encoder of a machine translation system.
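A bidirectional layer can be sketched as two independent recurrent passes, one over the sequence and one over its reverse. The backward states are re-aligned and then paired with the forward states at each time step. This toy uses a scalar RNN for each direction with hypothetical weights `fwd` and `bwd`. They are passed separately because the two directions normally have their own parameters. At the first time step, the forward state has seen only a zero, while the backward state already reflects the 1.0 at the end of the sequence.

```python
import math

def rnn_states(xs, w_x, w_h):
    """Scalar vanilla RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1}), starting from h_0 = 0."""
    h, out = 0.0, []
    for x in xs:
        h = math.tanh(w_x * x + w_h * h)
        out.append(h)
    return out

def birnn_states(xs, fwd=(1.0, 0.5), bwd=(1.0, 0.5)):
    """Pair the forward state (summary of x_1..x_t) with the backward state (summary of x_t..x_T)."""
    forward = rnn_states(xs, *fwd)
    backward = rnn_states(xs[::-1], *bwd)[::-1]  # re-align backward states with time steps
    return list(zip(forward, backward))

states = birnn_states([0.0, 0.0, 1.0])
```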

5. Attention Mechanisms and Transformers

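Attention mechanisms are not RNNs themselves, but they have been added to RNNs so the model can focus on the most relevant parts of the input sequence at each output step, rather than relying on a single summary vector. The transformer architecture relies entirely on self-attention and drops recurrence altogether. It has largely replaced RNNs for many NLP tasks because it processes all positions in parallel during training and generally achieves better results.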
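At its core, an attention mechanism computes a set of weights over the encoder's hidden states. Each state is scored against a query (for example, the current decoder state), the scores are normalized with a softmax, and the weighted average becomes a context vector. The sketch uses scaled dot-product scores, as in the transformer. RNN attention models have also used other scoring functions, such as additive scores. The function names and toy vectors are assumptions made for illustration.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot_product_attention(query, keys, values):
    """Return attention weights and the weighted average of values (scaled dot-product scores)."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    context = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]
    return weights, context

# Toy encoder hidden states, used as both keys and values.
hidden_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights, context = dot_product_attention([-2.0, 2.0], hidden_states, hidden_states)
```

Here the query [-2, 2] aligns best with the second hidden state, so that state receives most of the weight.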

Use Cases of RNNs

RNNs are highly versatile and find applications in various domains, particularly in natural language processing (NLP). Here are some notable use cases:

1. Language Modeling and Text Generation

RNNs are used to predict the next word in a sequence, which underlies applications like autocomplete, text generation, and machine translation. The hidden state summarizes the words seen so far. A trained model can generate coherent, contextually appropriate text by repeatedly choosing a next word and feeding it back in as the following input.
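Next-word prediction turns raw text into supervised training data: at each position, the target is simply the following token. The model is commonly trained to minimize the average cross-entropy, which is the negative log-probability it assigns to each true next word. The sketch below builds those pairs and scores a baseline model that assigns equal probability to every word in the vocabulary. The helper names are hypothetical, and tokenization is plain whitespace splitting for simplicity. In practice, the distributions would come from the RNN's output layer at each step.

```python
import math

def next_word_pairs(tokens):
    """Pair each token with the token that follows it (input, target)."""
    return list(zip(tokens[:-1], tokens[1:]))

def sequence_cross_entropy(predicted_dists, targets):
    """Average negative log-probability assigned to each true next word."""
    return -sum(math.log(dist[t]) for dist, t in zip(predicted_dists, targets)) / len(targets)

tokens = "the cat sat on the mat".split()
pairs = next_word_pairs(tokens)  # [('the', 'cat'), ('cat', 'sat'), ..., ('the', 'mat')]

# Baseline: a model that spreads probability evenly over the 5-word vocabulary.
vocab = sorted(set(tokens))
uniform = {w: 1.0 / len(vocab) for w in vocab}
targets = [nxt for _, nxt in pairs]
loss = sequence_cross_entropy([uniform] * len(targets), targets)  # equals log(5)
```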

2. Speech Recognition

In speech recognition, RNNs process audio signals over time to convert spoken language into text. Their ability to handle temporal dependencies makes them well suited to recognizing patterns in continuous speech.

3. Sentiment Analysis

RNNs can analyze the sentiment of a text by reading its words in order and classifying the final hidden state, so word order, not just word presence, can influence the prediction. This is useful for tasks like social media monitoring, customer feedback analysis, and opinion mining.
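Sentiment classification is a many-to-one use of an RNN: the network reads the whole text, and a classifier reads the final hidden state. In the sketch below, the one-dimensional word embeddings in `EMBED` and the weights are hand-picked assumptions, not learned values. Because the hidden state is updated in order, "great but awful" and "awful but great" get different scores even though they contain the same words. A bag-of-words model, which ignores order, cannot make that distinction.

```python
import math

# Hypothetical 1-dimensional word embeddings; unknown words map to 0.
EMBED = {"great": 1.0, "good": 0.8, "bad": -0.9, "awful": -1.0}

def sentiment_score(text, w_x=2.0, w_h=0.8, w_out=3.0):
    """Many-to-one RNN: read every word, then classify from the final hidden state.

    The weights are hand-picked for illustration, not learned.
    """
    h = 0.0
    for word in text.lower().split():
        h = math.tanh(w_x * EMBED.get(word, 0.0) + w_h * h)
    return 1.0 / (1.0 + math.exp(-w_out * h))  # probability that the text is positive

print(sentiment_score("great but awful"), sentiment_score("awful but great"))
```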

4. Machine Translation

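RNNs arranged in an encoder-decoder (sequence-to-sequence) architecture are used to translate text from one language to another. The encoder compresses the source sentence into a fixed-length vector, and the decoder generates the target-language sentence from that vector. Squeezing a long sentence into one fixed-size vector creates a bottleneck, which the attention mechanisms mentioned above were introduced to relieve.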
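The sketch below illustrates the fixed-length bottleneck. `encode` folds a source sequence of any length into one 4-dimensional vector, and `decode` unrolls a second RNN from that vector, feeding each output back in as the next input. Everything here is a hypothetical toy. Scalar inputs stand in for word embeddings, the weights are hand-picked, and the read-out is an average rather than a prediction over a target-language vocabulary.

```python
import math

HIDDEN = 4

def rnn_step(h, x, w_h, w_x):
    """Vanilla RNN cell with elementwise (diagonal) recurrent weights, to keep the sketch short."""
    return [math.tanh(a * hj + b * x) for hj, a, b in zip(h, w_h, w_x)]

def encode(source):
    """Encoder: fold a source sequence of any length into one HIDDEN-sized vector."""
    h = [0.0] * HIDDEN
    for x in source:
        h = rnn_step(h, x, w_h=[0.9, 0.5, -0.5, 0.2], w_x=[0.3, -0.4, 0.6, 1.0])
    return h

def decode(context, steps):
    """Decoder: start from the context vector and feed each output back as the next input."""
    h, y, outputs = context, 0.0, []
    for _ in range(steps):
        h = rnn_step(h, y, w_h=[0.8, 0.7, 0.6, 0.5], w_x=[0.1, 0.2, 0.3, 0.4])
        y = sum(h) / HIDDEN  # toy read-out; a real decoder predicts a target-language token
        outputs.append(y)
    return outputs

short_ctx, long_ctx = encode([1.0, 2.0]), encode([0.5] * 40)
print(len(short_ctx), len(long_ctx))  # both 4: the summary size does not grow with the input
print(decode(short_ctx, steps=3))
```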

5. Time Series Prediction

RNNs can predict future values of a time series from historical data, with applications in stock price prediction, weather forecasting, and sales forecasting.
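Time series forecasting with an RNN is usually framed as supervised learning over sliding windows. Each training example pairs a window of past values with the value some number of steps ahead. The minimal sketch below uses a hypothetical `make_windows` helper and made-up sales figures. With `horizon=1`, the target is the next value after the window.

```python
def make_windows(series, window, horizon=1):
    """Split a series into (past window, future value) pairs for supervised training."""
    pairs = []
    for start in range(len(series) - window - horizon + 1):
        past = series[start:start + window]
        target = series[start + window + horizon - 1]
        pairs.append((past, target))
    return pairs

sales = [10, 12, 13, 15, 18, 21]  # made-up values for illustration
pairs = make_windows(sales, window=3)
# → [([10, 12, 13], 15), ([12, 13, 15], 18), ([13, 15, 18], 21)]
```

Each `past` window would be fed to the RNN one value per time step, and the final hidden state would be mapped to a prediction of `target`.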

While RNNs are powerful, vanilla RNNs suffer from limitations such as the vanishing gradient problem, which makes training difficult on long sequences. Variants like Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs) were developed to address these issues. Their gates regulate the flow of information, enabling the network to retain relevant information over longer time spans.